Parents across the UK are being urged to stay vigilant this festive season as a sophisticated new scam uses artificial intelligence to clone a child's voice and trick families out of money.

The Mechanics of the AI Voice Clone Scam

Security experts have issued a stark warning about fraudsters who are now using freely available AI technology to create convincing replicas of a loved one's voice. The scam typically involves a distressing voicemail or call, seemingly from a child, pleading for urgent financial help due to an emergency.

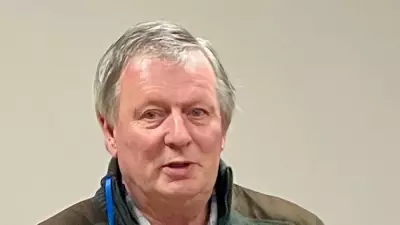

Oliver Devane, a senior researcher at the cybersecurity firm McAfee, confirms the alarming ease with which these clones can be made. "Having tested some of the free and paid AI voice cloning tools online, we found in one instance that just three seconds of audio was needed to produce a good match," he stated.

Criminals often harvest the short audio clips required from public social media profiles, where families innocently post videos, messages, and updates about their lives and travels.

How to Spot and Stop the Fraud

The emotional manipulation is the scam's core weapon. "The cybercriminal is betting on a loved one or family member becoming worried, letting emotions take over, and sending money to help," Devane explains. He advises potential victims to "try to remain level-headed and pause before you take any next steps."

If you receive a suspicious plea for help, your first action should be to directly contact the person who supposedly called. Use a known, trusted phone number, or reach out through another family member or friend to verify their safety.

Another major red flag is the method of payment requested. Scammers frequently demand payment methods that are hard to trace or reverse. "If the caller says to wire money, send cryptocurrency, or buy gift cards and give them the card numbers and PINs, those could be signs of a scam," warns the US Federal Trade Commission.

Protecting Your Family from Digital Deception

This warning comes ahead of the Christmas period, a time when people may be more emotionally vulnerable and financially active. The key defence is awareness and verification.

Devane stresses that criminals prey on high emotions and the powerful connection to a loved one, deliberately creating a false sense of urgency to provoke a rash reaction. Taking a moment to verify the story through a separate channel can prevent significant financial loss.

Families are also advised to review their social media privacy settings and be mindful of what personal audio and video content they share publicly online, as this can be the raw material for such AI-powered fraud.